Learn Docker in the AI Era: Essential Commands & Cheat Sheet

Why Docker Still Matters in 2025

Picture this: you’re in a team meeting, the application crashes, and everyone scrambles to fix it. In minutes, questions fly: “Does it work on your machine?” or “Maybe it’s a dependency issue?”.

With Docker, those excuses vanish. In seconds, you can spin up the same environment anywhere—locally, in the cloud, or on AI pipelines.

Even in the era of AI-generated code, Docker remains a critical skill. AI can draft a Dockerfile for you, but only you can understand, optimize, and debug it when things break.

The Origin and Relevance of Docker

- Released in 2013, Docker introduced a breakthrough: consistent, portable apps across environments.

- It reshaped DevOps and enabled the rise of microservices.

- Today, it’s at the heart of cloud-native development and AI infrastructure—from ML training pipelines to scalable inference services.

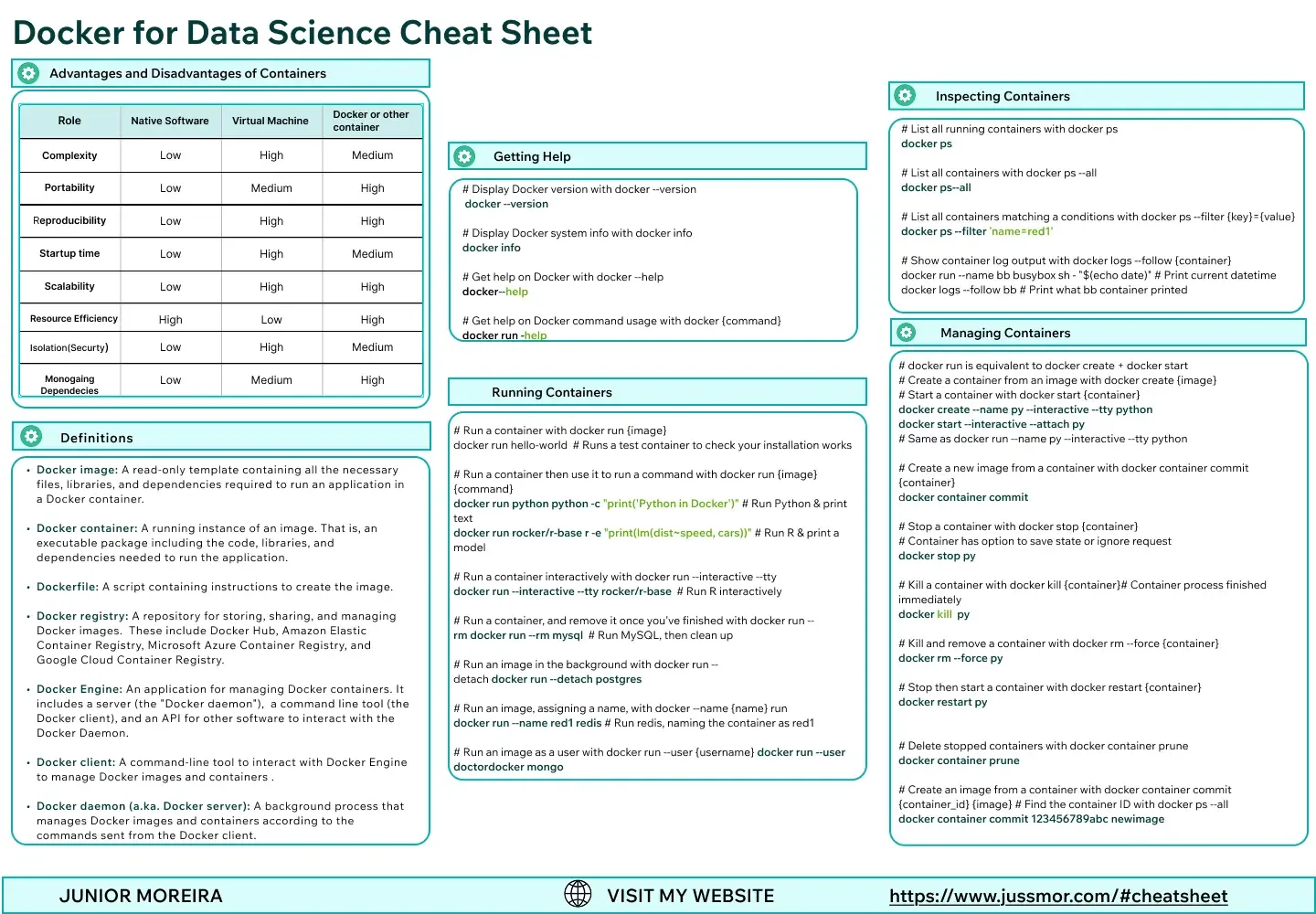

Core Components of Docker

Think of Docker like building blocks:

- Images: Read-only templates defining what goes into a container (OS, dependencies, app code).

- Containers: Running instances of images.

- Dockerfile: Blueprint for building images.

- Volumes: Persistent data storage.

- Networks: Enable container-to-container communication.

- Registry: Repositories to store and share images (e.g., Docker Hub).

Basic Docker Commands You’ll Use Daily

Check installation

docker --versionRun your first container:

docker run hello-worldList containers:

docker ps -aBuild an image from a Dockerfile:

docker build -t my-app .Run an app container with ports:

docker run -d -p 3000:3000 my-appManage containers and images:

docker stop <container_id>

docker rm <container_id>

docker rmi <image_id>Advanced Docker Concepts Worth Mastering

- Docker Compose: Orchestrate multiple containers with a single

docker-compose.yml. - Networking: Build private networks to connect microservices.

- Shared Volumes: Persist and share data across containers.

- Multi-stage builds: Optimize image size and security.

- Kubernetes integration: Orchestrate containers at scale.

- Best practices: Minimize base images, scan vulnerabilities, manage secrets.

# docker-compose.yml

services:

web:

build: ./web

ports:

- "3000:3000"

environment:

DATABASE_URL: postgres://postgres:postgres@db:5432/app

depends_on:

db:

condition: service_healthy

db:

image: postgres:16-alpine

environment:

POSTGRES_DB: app

POSTGRES_USER: postgres

POSTGRES_PASSWORD: postgres

volumes:

- db-data:/var/lib/postgresql/data

healthcheck:

test: ["CMD-SHELL", "pg_isready -U postgres -d app"]

interval: 5s

timeout: 3s

retries: 5

volumes:

db-data:Docker + AI: Real-World Scenarios

This is where it gets exciting. AI isn’t replacing Docker. it’s accelerating how you use it:

- AI-generated Dockerfiles: Tools like ChatGPT can draft configs, while you refine them for production.

- ML pipelines in Docker: Package TensorFlow or PyTorch environments for reproducible training.

- Model deployment: Serve an AI model in a container with an API (

docker run -p 8080:8080 model-service). - Scaling inference: Pair Docker with Kubernetes to handle thousands of predictions per second.

Recommended Resources

- 📄 Download my Docker Cheat Sheet (Download the Cheat Sheet HERE!)

Conclusion

Docker isn’t just another tool it’s the universal language of infrastructure. Mastering it enables you to:

- Build portable environments for development and production.

- Power AI and ML workflows with reproducibility.

- Scale apps in seconds.

- Use AI to generate Docker configurations, while relying on your own knowledge to debug and optimize.

If SQL is the universal language of data, Docker is the universal language of infrastructure.